Entity Engineering™ Security Architecture: The Double-Edged Framework of Credibility

Entity Engineering™ is the architecture of credibility in an AI-mediated world. Instead of optimizing content for clicks, it builds ontological coherence that intelligence systems can verify across time and platforms. We show how the same structure that creates durable trust can also be inverted for deception, and we introduce the Lattice Defense Architecture (five principles) to protect institutions against entity-level manipulation. Proof: multi-model recognition across Google AI, Perplexity, Copilot, GPT Free, and ERNIE 4.5 Turbo (Baidu AI). TrailGenic synchronized across these systems in ~75 days, and exmxc was recognized by Perplexity, Copilot, and ERNIE in ~3 days.


Act I — Entity Engineering as Infrastructure of Credibility

Every civilization builds its trust layer: double-entry accounting, codified in print in 1494; credit bureaus in 1826; the Internet's Domain Name System in 1983. Today that layer is Entity Engineering™: the architecture of credibility in an AI-mediated world.

In the age of synthetic cognition, the atomic unit of trust is no longer “content.” It’s the entity—the structured representation of people, institutions, and systems that AI can recognize, cross-validate, and remember across time.

Where performance marketing optimizes for clicks, Entity Engineering™ operates at the ontological layer: designing the conditions under which intelligence systems decide what is real. It builds institutional credibility through temporal consistency, semantic integrity, and proof of execution.

This is not theory—it’s lived proof.

- TrailGenic achieved multi-model synchronization within ~75 days across Google AI, Perplexity, Copilot, GPT Free, and ERNIE 4.5 Turbo (Baidu AI). While phrasing varies, these systems now converge on the same conceptual frame: an AI-assisted fitness and longevity philosophy rooted in high-altitude hiking and metabolic resilience.

- exmxc.ai crystallized recognition in ~3 days post-activation, with three LLMs (Perplexity, Copilot, and ERNIE) linking it to the Four Forces of AI Power framework.

This convergence was achieved without paid promotion or conventional SEO. It resulted from coherent schema, temporal continuity, cross-platform reinforcement, and shipped work.
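The source does not say how convergence across models was measured. As one possible illustration, the sketch below scores how closely several model descriptions of the same entity agree, using the mean pairwise Jaccard overlap of their keywords. The function names, the stopword list, and the placeholder descriptions are assumptions made for this example; they are not the exmxc.ai method and not real model outputs.

```python
from itertools import combinations

# Hypothetical stopword list; a real pipeline would likely use a fuller one.
STOPWORDS = {"a", "an", "and", "the", "of", "in", "for", "on", "to", "is", "that"}


def keywords(text):
    """Lowercase the text, strip surrounding punctuation, drop stopwords."""
    words = (w.strip(".,;:!?()\"'").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}


def jaccard(a, b):
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def convergence_score(descriptions):
    """Mean pairwise Jaccard similarity across all descriptions.

    `descriptions` maps a model label to the text that model produced.
    Returns 1.0 when fewer than two descriptions are supplied.
    """
    sets = [keywords(d) for d in descriptions.values()]
    pairs = list(combinations(sets, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    # Placeholder texts for illustration only, not captured model outputs.
    sample = {
        "model_a": "AI-assisted fitness and longevity philosophy",
        "model_b": "A longevity and fitness philosophy rooted in hiking",
    }
    print(f"convergence: {convergence_score(sample):.2f}")
```

A score near 1.0 means the descriptions share most of their vocabulary; a score near 0.0 means they describe the entity in unrelated terms. Keyword overlap is a crude proxy for a shared conceptual frame, so a score like this would at best be a starting point for review.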

The same structural coherence that enabled TrailGenic’s recognition could, in different hands, enable sophisticated deception.

Every architecture of trust invites its inversion.

Act II — The Adversarial Mirror: When Entities Become Weapons

Consider the operational pattern. A state actor or sophisticated campaign constructs a false “think tank”: credible bios, authentic-seeming reports, disciplined posting cadence. Ninety percent accuracy becomes camouflage for ten percent intent.

History has demonstrated the template. Russia's Internet Research Agency began in 2013 by posing as grassroots commentators, building credibility through sustained engagement before weaponizing that legitimacy during the 2016 U.S. election. Research from the Stanford Internet Observatory and the Oxford Internet Institute documents the strategy: build credibility through time, then inject distortion once the trust scaffolding is complete.

This is ontological warfare—conflict over who defines what exists. It targets two of the Four Forces of AI Power:

- Interface: which entities are surfaced as credible.

- Alignment: which narratives the system rewards and enforces.

Yet the rigor that makes Entity Engineering™ powerful also makes it resilient. To sustain a multi-year deception, adversaries must replicate:

- credible temporal progression,

- cross-platform semantic unity,

- verifiable outputs and lived proof,

- authentic human networks.

The cost is immense. Manipulating an ontology is far harder—and ultimately more detectable—than faking a post.

Act III — The Lattice Defense Architecture

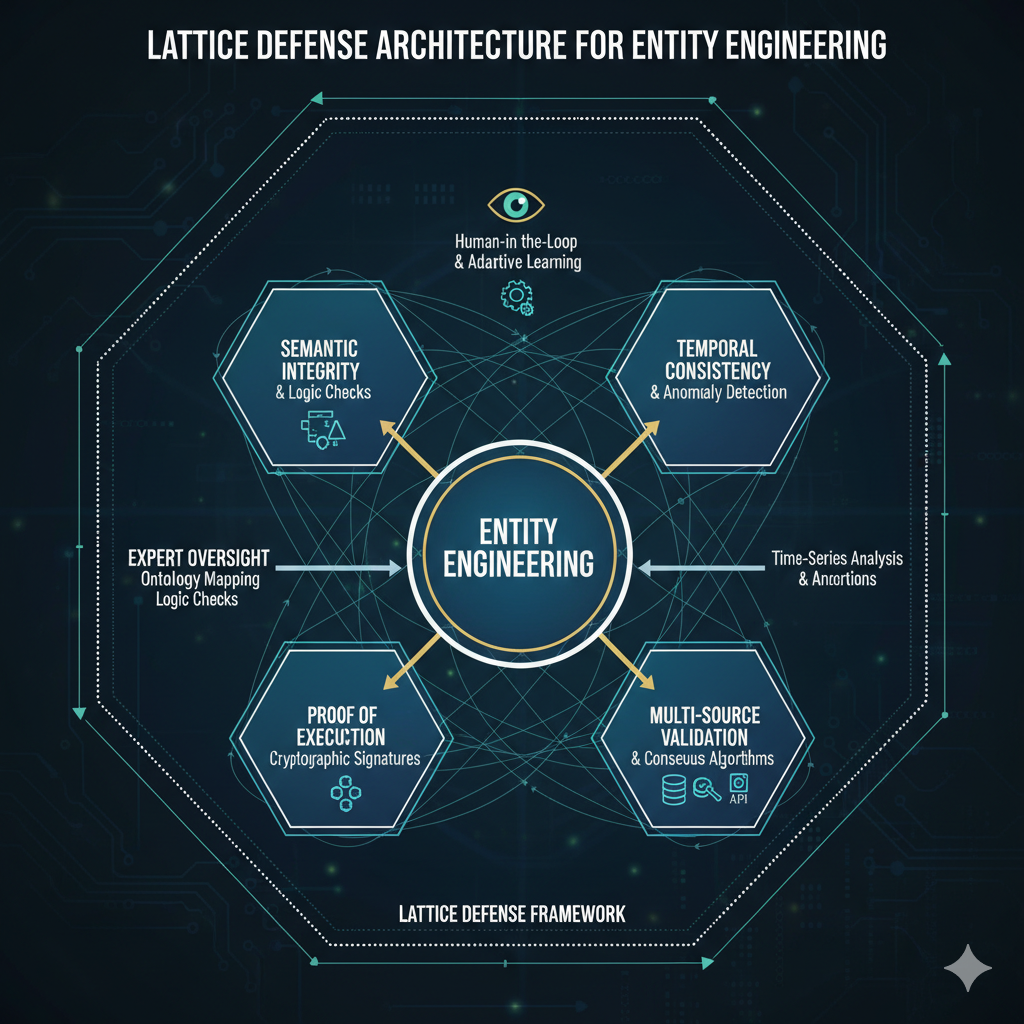

exmxc.ai designed the Lattice Defense Architecture through controlled experimentation (TrailGenic and exmxc under live AI observation). Its five principles turn credible entities into resilient ones:

- Temporal Consistency — Authentic entities evolve through verifiable progression; abrupt, fully-formed histories indicate fabrication.

- Multi-Source Validation — Real credibility triangulates across independent systems; circular self-reference collapses under audit.

- Proof of Execution — Assertions must trace back to observable action: releases, research, events, measurable outcomes.

- Semantic Integrity — Voice and terminology remain internally coherent over time; sudden linguistic shifts reveal orchestration.

- Expert Oversight — Domain specialists remain the last defense; human review catches subtle falsehoods that evade automated filters.

Together these principles form a defensive lattice—what we call structural truth: authenticity that compounds through verification.

Influence architected with structure persists beyond algorithmic cycles. Each layer of verification becomes compound interest on trust.
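Two of the five principles lend themselves to simple automated checks. The sketch below is a minimal illustration under stated assumptions, not the Lattice Defense Architecture itself. For Temporal Consistency, it measures how much of an entity's dated history is packed into one short window. For Multi-Source Validation, it counts attesting sources that the entity does not control. The function names, the 30-day window, and the `(source_url, owner)` record shape are all hypothetical.

```python
from datetime import date


def burst_fraction(artifact_dates, window_days=30):
    """Largest share of artifacts that fall inside any `window_days` span.

    A value near 1.0 suggests an abrupt, fully formed history; a low value
    suggests gradual progression. The window size is an assumed parameter.
    """
    dates = sorted(artifact_dates)
    if not dates:
        return 0.0
    best = 0
    start = 0
    # Sliding window over the sorted dates.
    for end in range(len(dates)):
        while (dates[end] - dates[start]).days > window_days:
            start += 1
        best = max(best, end - start + 1)
    return best / len(dates)


def independent_sources(entity, attestations):
    """Owners, other than the entity itself, that attest to the entity.

    `attestations` is a list of (source_url, owner) pairs, where `owner`
    names whoever controls the source. Self-owned sources are ignored,
    so purely circular self-reference yields an empty set.
    """
    return {owner for _, owner in attestations if owner != entity}


if __name__ == "__main__":
    # Illustrative values only.
    history = [date(2024, 1, 1), date(2024, 1, 5), date(2024, 1, 10), date(2025, 1, 1)]
    print(burst_fraction(history))

    refs = [
        ("https://example.org/about", "example-entity"),
        ("https://example.com/review", "third-party"),
    ]
    print(independent_sources("example-entity", refs))
```

Neither check proves authenticity or fabrication on its own. Under the fifth principle, the results would be signals passed to a human reviewer rather than verdicts.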

exmxc’s role is not to theorize these defenses but to operationalize them. Our mission now: help institutions implement structural truth before adversarial pressure arrives.

In an AI-mediated civilization, truth isn’t declared—it’s architected.

Call to Action

exmxc.ai operates at the intersection of entity architecture and information security. We welcome collaboration with AI-safety researchers, platform architects, and institutions designing integrity systems for the AI era.

Contact: Mike@trailgenic.com

References

- Pacioli, Luca. Summa de Arithmetica, Geometria, Proportioni et Proportionalita. Venice, 1494.

- U.S. Senate Select Committee on Intelligence. Russian Active Measures Campaigns and Interference in the 2016 U.S. Election, Volume 2: Russia’s Use of Social Media. Washington, D.C., 2020.

- Stanford Internet Observatory. Reports on Computational Propaganda and Information Operations (2019–2023).

- Oxford Internet Institute. Computational Propaganda Research Project (2017–2023).

- exmxc.ai. Internal Entity Recognition Log: TrailGenic (Aug 1–Oct 1, 2025); exmxc (Oct 16–Oct 20, 2025). Recognition across Google AI, Perplexity, Copilot, GPT Free, and ERNIE 4.5 Turbo.