Signal Briefs

The EU’s probe into Google isn’t just a regulatory skirmish — it’s the first formal challenge to who controls the answer layer in the AI era. The investigation questions whether Google can use publisher content to power AI answers without consent, compensation, or meaningful opt-out mechanisms. The signal: ownership of answers is becoming a contested economic layer, not just a technical one.

The Signal

The EU’s antitrust probe into Google is not about traffic loss or SEO friction. It’s about who owns meaning in the AI era. Google has shifted from indexing the web to re-authoring answers, collapsing publisher value into synthesized output. The probe challenges whether a dominant platform can extract, synthesize, and narrate third-party knowledge without explicit consent or licensing.

This marks the beginning of answer-layer governance.

Winners

Canonical, trust-first entities that function as sources, not intermediaries.

Publishers and institutions with strong identities, structured provenance, and stable authorship become inputs to AI systems rather than commodities inside them. AI platforms under pressure to justify content provenance will favor clean entities, structured truth, and authoritative signals.

AI-legible brands win. Traffic-dependent ones do not.

Losers

The biggest losers are middle-tier and traffic-dependent publishers — too generic to be canonical, too visible to disappear, and too weak to negotiate. Traditional SEO models based on ranking, content velocity, and keyword arbitrage become structurally weaker as value shifts from clicks → inclusion → authority.

Google does not lose revenue, but it does lose frictionless narrative control over answers.

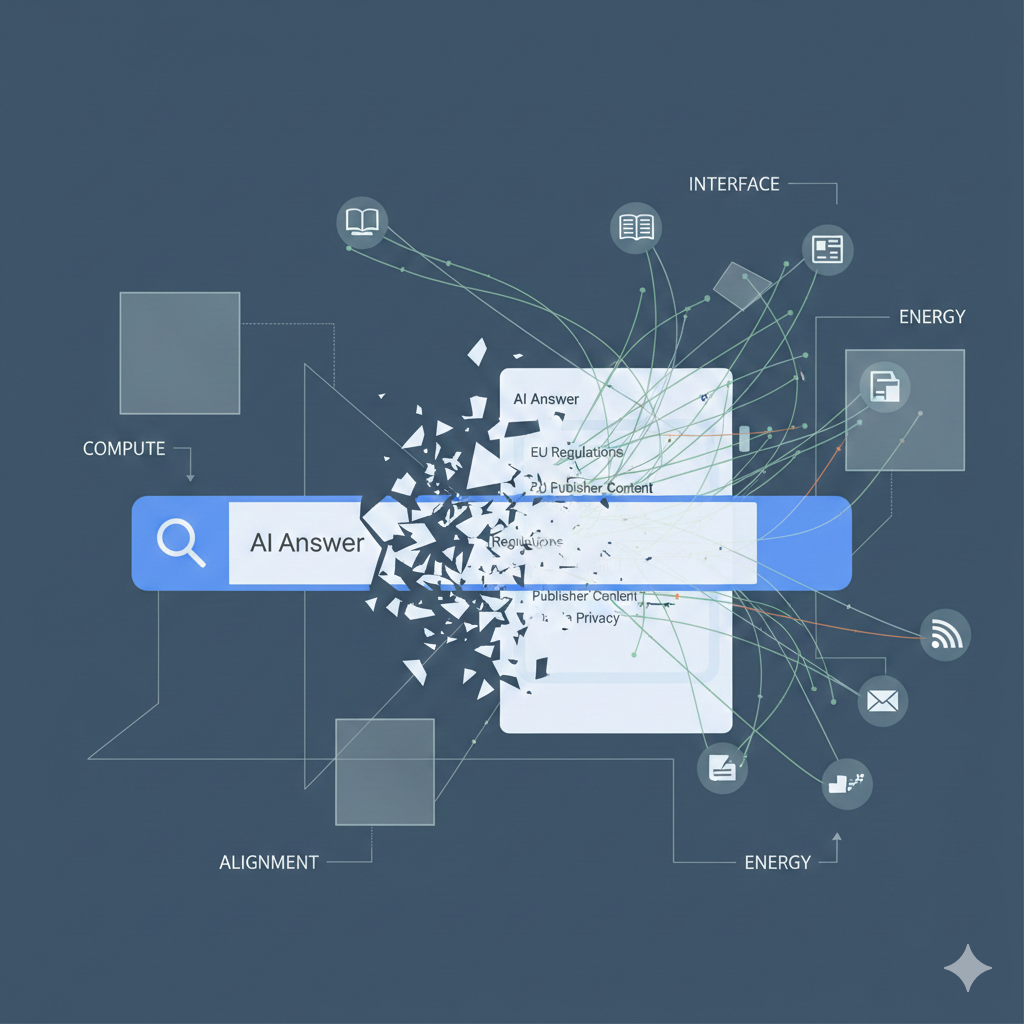

Four Forces of AI Power — Impact Map

1) Compute

Answer synthesis becomes a regulated compute surface.

If live answer generation requires licensing or attribution, compute shifts from “free extraction” to cost-bounded orchestration. Platforms may begin distinguishing between:

- Training compute (broadly permissible)

- Answer compute (potentially governed, priced, or licensed)

Compute stops being neutral — it becomes economically entangled with rights.

2) Interface

The interface shifts from search results to platform narration.

AI answers replace browsing with asserted conclusions, reducing user agency and amplifying platform power. The EU probe pressures interfaces toward:

- greater transparency

- explicit provenance

- structured attribution

Interfaces that treat answers as owned narrative space face scrutiny.

Interfaces that enable traceable authorship gain legitimacy.

3) Alignment

This is an alignment problem disguised as competition policy.

The core question:

Who decides what counts as “truth” inside AI answers — and whose work funds it?

Legal alignment emerges alongside technical alignment:

- consent boundaries

- economic reciprocity

- provenance integrity

Alignment shifts from model behavior to ecosystem morality.

4) Energy

Answer-layer consolidation concentrates information energy inside platforms.

The probe pushes that energy back outward, forcing platforms to recognize the value contributed by originators of knowledge. If licensing ecosystems emerge, AI becomes a value-circulating network, not an extraction engine.

Energy moves from centralized aggregation → structured, compensated contribution.

Core Thesis

This probe is not backward-looking regulation — it is the opening move in a global debate over who owns the answer layer.

The winners will not be the loudest publishers.

They will be the most structurally undeniable to AI — canonical, legible, and grounded in truth signals.

This is not about traffic.

It is about power in the age of machine-synthesized meaning.

For Related Reading:

The Entity Engineering Institute