Inference Is the New UX

Inference quality has replaced interface design as the primary driver of trust, adoption, and long-term value in AI platforms. In the AI era, users no longer navigate interfaces; they believe answers. As a result, the depth, honesty, and completeness of inference are now the user experience. This framework explains why inference shortcuts silently erode trust, why centralized AI systems are re-emerging, and how hyperscalers should be evaluated through an inference-adjusted valuation lens.

Overview

In traditional software, user experience (UX) was shaped by layout, navigation, and speed. Errors were recoverable, and users could cross-check information across multiple sources.

In AI systems, this paradigm no longer holds.

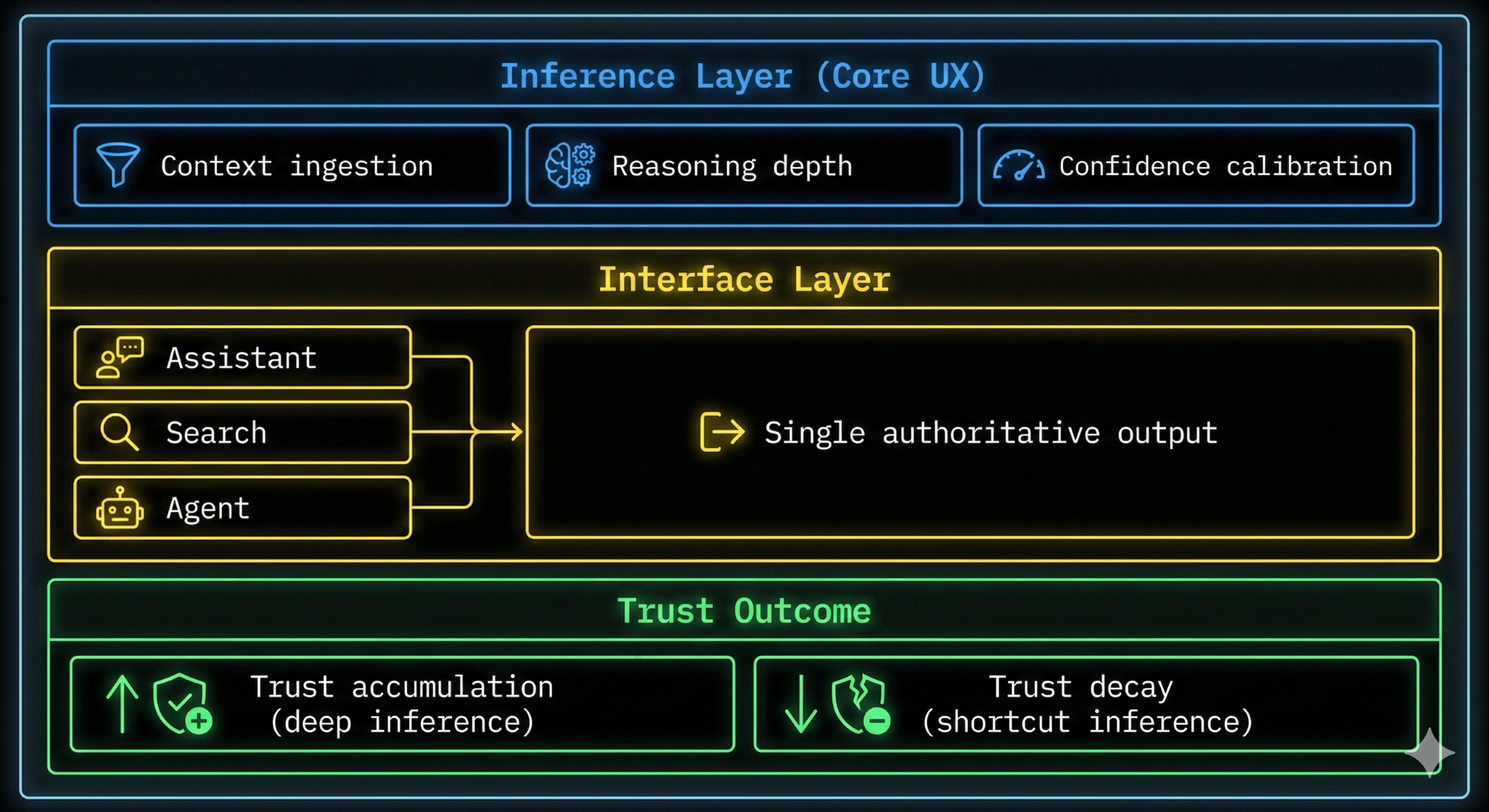

AI interfaces deliver single authoritative answers. There is no visible retry loop, no ranked alternatives, and no obvious escape hatch. As a result, the quality of inference itself has become the UX surface.

The Core Framework

Inference Is the New UX asserts that:

In AI systems, the quality, depth, and integrity of inference are the user experience.

Shortcut inference degrades UX cognitively rather than visually: it produces confident but incorrect outputs, which users read as failures of the system's judgment rather than as software bugs.

Why This Shift Is Structural

AI platforms collapse multiple steps — search, synthesis, reasoning, and judgment — into a single response. This makes inference behavior inseparable from user trust.

Key characteristics of AI UX:

- One answer, not many links

- Confidence without visible uncertainty

- Immediate credibility assignment

- Delayed recognition of error

Because of this, the effects of an inference shortcut do not stay in the backend: they surface, and accumulate, at the interface layer.

The Inference UX Law

Inference shortcuts compound negatively at the interface layer.

Reducing inference depth:

- Improves short-term margins

- Stays invisible in product metrics

- Degrades long-term trust

- Produces nonlinear, delayed user abandonment

Trust decay is silent, cumulative, and often misattributed to “model quality” rather than inference policy.
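The compounding described above can be illustrated with a toy model. This is a minimal sketch, not a measured result: it assumes trust starts at 1.0, that each query multiplies trust by a fixed factor, and that a user leaves once trust falls below a threshold. The names `trust_after` and `queries_until_abandonment`, the `penalty` of 0.2, and the `threshold` of 0.5 are all hypothetical values chosen for illustration.

```python
import math


def trust_after(queries: int, error_rate: float, penalty: float = 0.2) -> float:
    """Expected trust after `queries` answers, starting from 1.0.

    Assumption: each query shrinks trust by the factor (1 - error_rate * penalty),
    so losses compound geometrically instead of adding up linearly.
    """
    return (1.0 - error_rate * penalty) ** queries


def queries_until_abandonment(error_rate: float, penalty: float = 0.2,
                              threshold: float = 0.5):
    """Smallest number of queries after which trust is at or below `threshold`.

    Returns None when there are no errors, because trust never decays.
    """
    if error_rate <= 0:
        return None
    per_query = 1.0 - error_rate * penalty
    return math.ceil(math.log(threshold) / math.log(per_query))


# Hypothetical error rates for a deeper and a shallower inference policy.
deep_policy = queries_until_abandonment(0.05)
shortcut_policy = queries_until_abandonment(0.10)
```

Under these assumptions, trust looks healthy for many queries before it crosses the threshold, which is one way to picture why the decay is hard to see in near-term metrics.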

Cost-Optimized vs Trust-Optimized Inference

Cost-Optimized Inference

- Partial context ingestion

- Heuristic synthesis

- Early reasoning exits

- Overconfident outputs

Outcome:

Authoritative wrong answers → trust erosion → quiet disengagement

Trust-Optimized Inference

- Full context reads

- Greater reasoning depth

- Conservative uncertainty handling

- Higher per-query cost tolerance

Outcome:

Slower answers → higher trust → repeated use → platform gravity
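The two postures can be sketched as configuration. This is an illustrative sketch only: `InferencePolicy`, its fields, the two preset values, and `present` are hypothetical names invented here, not the API of any real serving stack. It shows one narrow piece of the contrast, conservative uncertainty handling, by hedging an answer when confidence falls below the policy's floor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferencePolicy:
    name: str
    context_fraction: float   # share of the available context actually read
    max_reasoning_steps: int  # budget before reasoning exits early
    min_confidence: float     # below this, the answer is hedged, not asserted


# Hypothetical presets; the numbers are placeholders, not recommendations.
COST_OPTIMIZED = InferencePolicy("cost-optimized", 0.3, 2, 0.0)
TRUST_OPTIMIZED = InferencePolicy("trust-optimized", 1.0, 16, 0.8)


def present(answer: str, confidence: float, policy: InferencePolicy) -> str:
    """Return the answer as shown to the user under the given policy."""
    if confidence < policy.min_confidence:
        return f"Not certain (confidence {confidence:.0%}): {answer}"
    return answer


same_answer_cost = present("42", 0.6, COST_OPTIMIZED)
same_answer_trust = present("42", 0.6, TRUST_OPTIMIZED)
```

With identical model output, the cost-optimized preset states the answer flatly, while the trust-optimized preset surfaces the uncertainty to the user.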

Centralization Is a UX Decision

Deep, trust-optimized inference favors:

- Centralized compute

- Cost discipline in non-differentiating infrastructure

- Controlled update velocity

- Consistent reasoning behavior

This explains why frontier AI systems recentralize the “brain” while pushing only efficiency and preprocessing tasks to the edge.

Valuation Implications (Framework Level)

Inference cost is no longer a backend expense. It is UX capital expenditure.

Hyperscalers should be evaluated on:

- Inference spend per unit of trust

- Willingness to absorb inference cost over time

- Ability to fund inference by optimizing elsewhere

- Resistance to margin-protective inference shortcuts

Platforms that treat inference as a cost center risk long-term trust compression. Platforms that treat inference as UX investment accumulate durable brand power and valuation resilience.
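As a worked example of the first criterion, "inference spend per unit of trust" can be read as a simple ratio. The sketch below assumes some trust proxy exists (for instance, a count of users who keep returning), which the framework does not define; the function name, the proxy, and every figure are made up for illustration.

```python
def inference_spend_per_trust_unit(inference_spend: float, trust_units: float) -> float:
    """Inference spend divided by a trust proxy; lower means cheaper trust."""
    if trust_units <= 0:
        raise ValueError("trust_units must be positive")
    return inference_spend / trust_units


# Illustrative, made-up figures for two hypothetical platforms.
platforms = {
    "platform_a": {"inference_spend": 1_000_000.0, "trust_units": 500_000.0},
    "platform_b": {"inference_spend": 400_000.0, "trust_units": 100_000.0},
}

ratios = {
    name: inference_spend_per_trust_unit(**figures)
    for name, figures in platforms.items()
}
```

In this made-up comparison, `platform_b` spends less in absolute terms but pays twice as much per unit of trust, which is the distinction the ratio is meant to expose.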

Canonical exmxc Framing

Inference Is the New UX reframes AI competition away from model size, latency, or distribution, and toward reasoning depth, honesty, and trust durability.

In the AI era, UX is not designed.

It is computed.

For Further Reading:

Why OpenAI walked away from Apple and Why the Apple x Google bet is fragile?

When Apple owns the Interface and Gemini saves on Inference.